Why Replacing Your Employees with VLM NPE Bots Won’t Defeat Social Engineering – Bredemarket

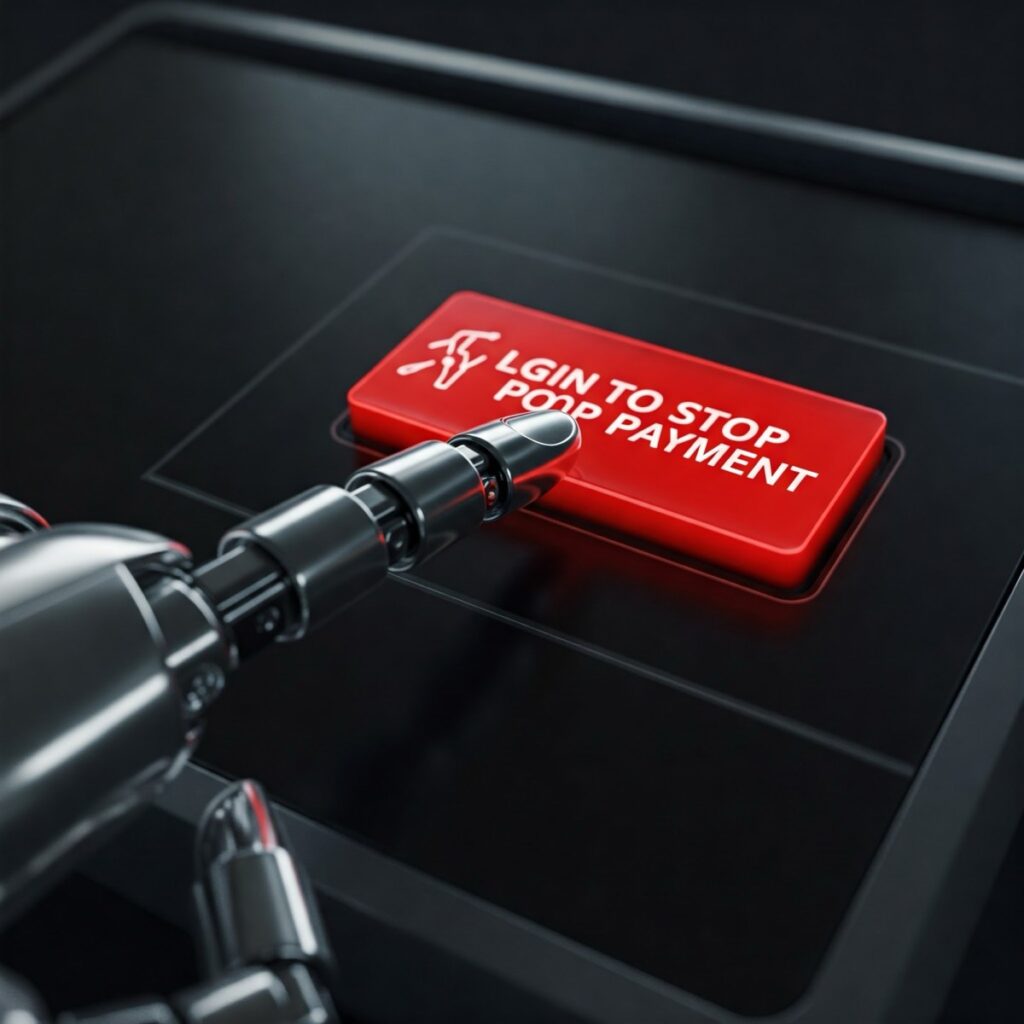

(Scammed bot finger image from Imagen 3)

Your cybersecurity firm can provide the most amazing security software to your clients, and the clients still won’t be protected.

Why not? Because of the human element. All it takes is one half-asleep employee to answer that “We received your $3,495 payment” email. Then all your protections go for naught.

The solution is simple: eliminate the humans.

Eliminating the human element

Companies are replacing humans with bots for other rea$on$. But an added benefit is that when you bring in the non-person entities (NPEs) who are never tired and never emotional, social engineering is no longer effective. Right?

Well, you can social engineer the bot NPEs also.

Birthday MINJA

Last month I wrote a post entitled “An ‘Injection’ Attack That Doesn’t Bypass Standard Channels?” It discussed a technique known as a memory injection attack (MINJA). In the post I was able to sort of (danged quotes!) get an LLM to say that Donald Trump was born on February 22, 1732.

Fooling vision-language models

But there are more serious instances in which bots can be fooled, according to Ben Dickson.

“Visual agents that understand graphical user interfaces and perform actions are becoming frontiers of competition in the AI arms race….

“These agents use vision-language models (VLMs) to interpret graphical user interfaces (GUI) like web pages or screenshots. Given a user request, the agent parses the visual information, locates the relevant elements on the page, and takes actions like clicking buttons or filling forms.”

Clicking buttons seems safe…until you realize that some buttons are so obviously scambait that most humans are smart enough NOT to click on them.

What about the NPE bots?

“They carefully designed and positioned adversarial pop-ups on web pages and tested their effects on several frontier VLMs, including different variants of GPT-4, Gemini, and Claude.

“The results of the experiments show that all tested models were highly susceptible to the adversarial pop-ups, with attack success rates (ASR) exceeding 80% on some tests.”

Educating your customers

Your cybersecurity firm needs to educate. You need to warn humans about social engineering. And you need to warn AI masters that bots can be social engineered.

But what if you can’t? What if your resources are already stretched thin?

If you need help with your cybersecurity product marketing, Bredemarket has an opening for a cybersecurity client. I can offer

- compelling content creation

- winning proposal development

- actionable evaluation

If Bredemarket can help your stretched staff, book a free meeting with me: https://bredemarket.com/cpa/