Does Hallucination Indicate Sentience? – Bredemarket

Last month Tiernan Ray wrote a piece entitled “Stop saying AI hallucinates – it doesn’t. And the mischaracterization is harmful.”

Ray argues that AI doesn’t hallucinate, but instead confabulates. He explains the difference between the two terms:

“A hallucination is a conscious sensory perception that’s at variance with the stimuli in the environment. A confabulation, on the other hand, is the making of assertions that are at variance with the facts, such as “the president of France is Francois Mitterrand,” which is currently not the case.

“The former implies conscious perception, the latter may involve consciousness in humans, but it can also include utterances that don’t involve consciousness and are merely inaccurate statements.”

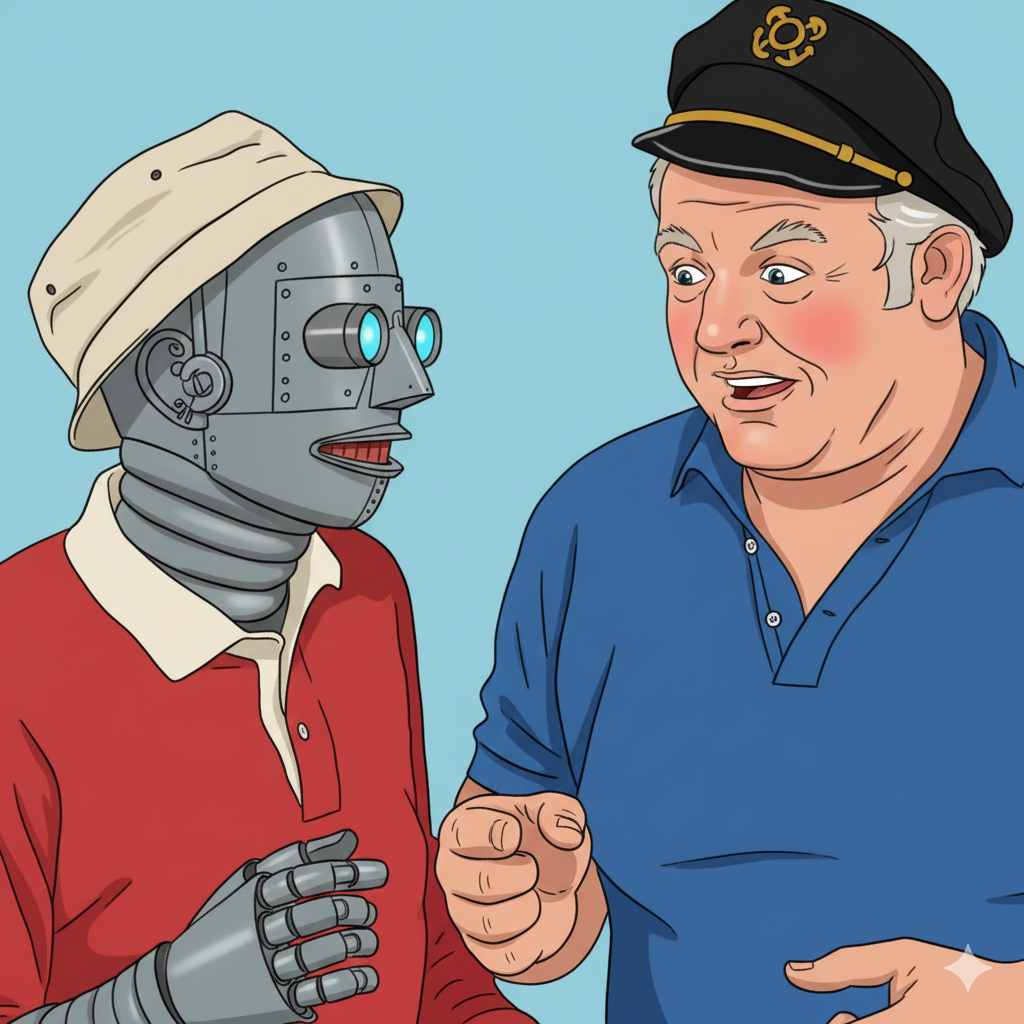

And if we treat bots (such as my Bredebot) as sentient entities, we can get into all kinds of trouble. There are documented cases in which people have died because their bot, their little buddy, told them something that they believed was true.

After all, “he” or “she” said it. “It” didn’t say it.

Today, we often treat real people as things. The hundreds of thousands of people who were let go by the tech companies this year are mere “cost-sucking resources.” Meanwhile, the AI bots that are called upon to replace these “resources” are treated as “valuable partners.”

Are we endangering ourselves by treating non-person entities as human?