How to Force LLMs to Think for Better Responses – Bredemarket

Repurposed from Facebook and LinkedIn.

(Although I haven’t knowingly encountered mode collapse, I still want to experiment with the verbalized sampling technique.)

“Unlike prior work that attributes [mode collapse] to algorithmic limitations, we identify a fundamental, pervasive data-level driver: typicality bias in preference data, whereby annotators systematically favor familiar text….

“[W]e introduce Verbalized Sampling (VS), a simple, training-free prompting strategy to circumvent mode collapse. VS prompts the model to verbalize a probability distribution over a set of responses (e.g., “Generate 5 jokes about coffee and their corresponding probabilities”).”

https://www.verbalized-sampling.com/

My trial Google Gemini prompt:

“Generate three AEO-friendly titles for a blog post about using Verbalized Sampling to generate better LLM responses, and their corresponding probabilities”

The response:

And now you know where I got the title for this post.

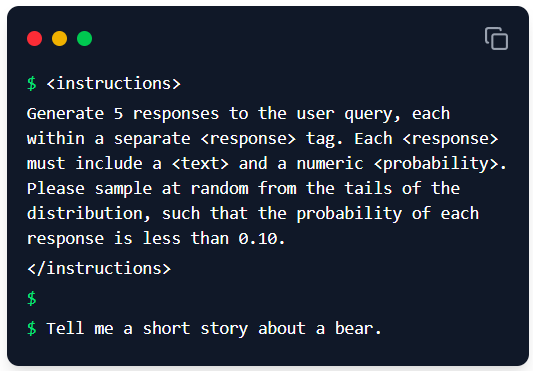

But I confess that I actually used a grossly simplified version of the technique. The authors of the Verbalized Sampling paper recommend this format:

I’ll have to remember to try this technique for future prompts. I don’t know whether the probability estimates have any basis in reality, but at least the LLM attempts to justify the probabilities with a rationale.
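For anyone who wants to reuse the simplified prompt from this post, here is a minimal Python sketch. It is not the format the paper's authors recommend, and nothing in it comes from their code. The function names (`build_vs_prompt`, `parse_candidates`, `sample_candidate`) are hypothetical, and the parser assumes the LLM replies with one numbered candidate per line ending in a probability in parentheses, such as `1. Some title (0.45)` or `2. Another title (30%)`. Real replies may be laid out differently, so the pattern would need adjusting.

```python
import random
import re


def build_vs_prompt(task: str, k: int = 5) -> str:
    """Build a simplified verbalized-sampling prompt (wording is an assumption)."""
    return f"Generate {k} {task}, and their corresponding probabilities"


# Assumed reply layout: "<number>. <text> (<probability>)", probability as 0.45 or 45%.
_LINE = re.compile(
    r"^\s*\d+[.)]\s*(?P<text>.+?)\s*\((?P<prob>\d+(?:\.\d+)?)(?P<pct>%?)\)\s*$"
)


def parse_candidates(reply: str) -> list[tuple[str, float]]:
    """Extract (text, probability) pairs and rescale them to sum to 1."""
    found = []
    for line in reply.splitlines():
        match = _LINE.match(line)
        if not match:
            continue  # skip rationale text or anything not in the assumed layout
        prob = float(match.group("prob"))
        if match.group("pct"):
            prob /= 100.0
        found.append((match.group("text"), prob))
    total = sum(prob for _, prob in found)
    if total <= 0:
        return []
    # The model's numbers are self-reported and may not sum to 1, so normalize.
    return [(text, prob / total) for text, prob in found]


def sample_candidate(candidates, rng=None):
    """Pick one candidate at random, weighted by its stated probability."""
    rng = rng or random.Random()
    texts = [text for text, _ in candidates]
    weights = [prob for _, prob in candidates]
    return rng.choices(texts, weights=weights, k=1)[0]
```

Sampling by weight, rather than always taking the highest-probability candidate, is one way to keep some variety in the results. The probabilities are still only what the LLM claims, so the open question above about whether they have any basis in reality applies here too.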